Research Activities in Wearable & Pervasive Computing

Below is a summary of my research activities, organized by topic. This work was carried out mostly during my postdoctoral years at the Wearable Computing Laboratory at ETH Zürich, with topical collaborations with other institutes. This list is updated only sporadically; for more up-to-date information, see my publications page.

Objectives

My activities center on context awareness, especially human activity and gesture recognition, in wearable and pervasive computing, with emphasis on novel adaptive and learning algorithms and on multi-sensor correlation and fusion, targeting miniature embedded platforms. My activities are guided by the following questions:

- What frameworks and programming models allow for efficient distributed context recognition?

- How can sensors be exploited for context awareness in a scalable and robust manner in open-ended environments?

- What new applications arise from sensing on a large scale?

- What are promising new sensor technologies?

I co-initiated and coordinate the EU FP7 FET-Open project OPPORTUNITY, where we investigate novel methods for context awareness in opportunistic sensor configurations. I am active in the EU FP7 FET-Proactive project SOCIONICAL, where I am interested in the machine understanding of crowds by means of sensor networks and complexity science models.

Activity Recognition in Opportunistic Sensor Configurations [2009-]

Nowadays, a large number of sensors are readily available on the body, in objects carried by the user (watches, cellphones), and in the environment. Thus, rather than deciding which sensor modality to deploy and where (the dominant approach until now), the question becomes how to exploit the information of multiple already-deployed sensors for context awareness. We call this context recognition in opportunistic sensor configurations. Activity recognition in opportunistic sensor configurations requires rethinking the activity recognition chain to make it more modular, more flexible, and more adaptive. Most of this work is carried out within the EU FP7 FET-Open project OPPORTUNITY.

Activity recognition in opportunistic sensor configurations: objectives and approach

I make the case for activity recognition to move from statically defined sensor configurations towards an opportunistic use of the resources that are available on and around the user. The wide availability of sensors in our living environment, in objects, and soon in our clothing makes this (or will soon make this) a reality. This leads to a rethinking of the traditional activity recognition chain. I outline research directions to improve the activity recognition chain in papers that essentially summarize the objectives of the EU FP7 FET-Open project OPPORTUNITY, within which this work is carried out.

A reference dataset for opportunistic activity recognition

To develop and benchmark algorithms for activity recognition in opportunistic sensor configurations, we collected a large dataset of complex activities in a highly sensor-rich environment. This allows opportunistic sensor configurations to be investigated and compared through simulation. We deployed 15 wireless and wired networked sensor systems comprising 72 sensors of 10 modalities, in the environment, in objects, and on the body. We acquired data from 12 subjects performing morning activities, yielding over 25 hours of sensor data. Over 11,000 object interactions and over 17,000 environment interactions occurred.

Ensemble classifiers for scalable performance and robustness to faults

To exploit a large number of sensors (on the body, in objects, and in the environment), possibly of different modalities, we rely on ensemble classifiers: the decisions of classifiers operating on individual sensor nodes are combined into an overall decision about the user's activities or gestures.
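As a minimal sketch of the fusion step, assuming each node outputs a single class label and that the decisions are combined by an (optionally weighted) majority vote, the function below shows how a failed node simply drops out of the vote instead of breaking the overall decision. The function name `fuse`, the node names and the per-node weights are hypothetical, and the combination rule used in this work may differ:

```python
from collections import defaultdict
from typing import Dict, Hashable, Optional


def fuse(decisions: Dict[str, Optional[Hashable]],
         weights: Optional[Dict[str, float]] = None) -> Optional[Hashable]:
    """Combine per-node class decisions by (weighted) majority vote.

    decisions maps a sensor node id to the label its local classifier output,
    or to None if the node is missing or has failed. Without weights every
    node gets one vote; ties go to the label that was seen first.
    """
    scores: Dict[Hashable, float] = defaultdict(float)
    for node, label in decisions.items():
        if label is None:  # a faulty node simply does not vote
            continue
        scores[label] += 1.0 if weights is None else weights.get(node, 1.0)
    if not scores:
        return None
    return max(scores, key=scores.get)


print(fuse({"wrist": "walk", "hip": "walk", "ankle": "sit"}))  # walk
print(fuse({"wrist": "walk", "hip": None, "ankle": "sit"},
           weights={"wrist": 0.6, "ankle": 0.9}))  # sit
```

Adding sensors only adds voters, which is one intuitive reason why such ensembles can scale with the number of available nodes.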

Network-level power-performance trade-off in activity recognition by dynamic sensor selection

Based on previous insights into the scalable performance offered by ensemble classifiers on multiple on-body sensors, we developed a power-performance management mechanism that dynamically adjusts, at run time, the recognition accuracy of an activity recognition system and …
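As a generic illustration of dynamic sensor selection (not necessarily the mechanism developed in this work), the sketch below assumes a power cost per sensor and some estimate of the ensemble's accuracy for each sensor subset, here a hypothetical callable that could be backed by a table measured offline. It exhaustively searches for the lowest-power subset that still meets an accuracy target. The function `select_sensors` and all numbers are hypothetical:

```python
from itertools import combinations
from typing import Callable, Dict, FrozenSet, Optional, Tuple


def select_sensors(power: Dict[str, float],
                   accuracy: Callable[[FrozenSet[str]], float],
                   target: float) -> Tuple[Optional[FrozenSet[str]], float]:
    """Return the lowest-power sensor subset whose estimated accuracy meets target.

    power maps each sensor to its power cost; accuracy estimates the ensemble
    accuracy of a subset. Exhaustive search, so only practical for a handful
    of sensors. Returns (None, inf) if no subset reaches the target.
    """
    best: Optional[FrozenSet[str]] = None
    best_cost = float("inf")
    sensors = sorted(power)
    for k in range(1, len(sensors) + 1):
        for subset in combinations(sensors, k):
            chosen = frozenset(subset)
            cost = sum(power[s] for s in chosen)
            # accuracy is only evaluated for subsets cheaper than the current best
            if cost < best_cost and accuracy(chosen) >= target:
                best, best_cost = chosen, cost
    return best, best_cost


# Hypothetical numbers: power in mW, accuracy depending only on subset size.
power = {"wrist": 3.0, "hip": 2.0, "ankle": 4.0}
estimated = {1: 0.70, 2: 0.82, 3: 0.88}
subset, cost = select_sensors(power, lambda s: estimated[len(s)], target=0.80)
print(sorted(subset), cost)  # ['hip', 'wrist'] 5.0
```

In this sketch, raising or lowering `target` at run time is what trades recognition accuracy against power consumption.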

Unsupervised classifier self-calibration

We developed a new online unsupervised classifier self-calibration algorithm. When contexts re-occur, the self-calibration algorithm adjusts the decision boundaries through online learning to better reflect the class statistics, effectively tracking classes as they drift in the feature space. We applied this method to activity recognition under changes in on-body sensor placement. Such changes shift the class distributions in the feature space, which usually degrades the performance of activity recognition systems. We showed that unsupervised classifier self-calibration can provide robustness against moderate sensor displacement, such as that occurring during physical activities or when sensors are worn over extended periods of time. This work was done together with Kilian Förster and Gerhard Tröster within the EU FP7 FET-Open project OPPORTUNITY.
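A minimal sketch of the idea, assuming a nearest-class-center classifier whose centers were trained offline: each incoming sample is classified, and the winning class center is moved a small step towards it using only the predicted label, with no ground truth. This is one simple way to let decision boundaries follow drifting classes and is not necessarily the published algorithm; the class `SelfCalibratingNCC` and its `learning_rate` are hypothetical:

```python
import numpy as np


class SelfCalibratingNCC:
    """Nearest-class-center classifier with unsupervised online center updates."""

    def __init__(self, centers, learning_rate=0.1):
        # centers: array of shape (n_classes, n_features), trained offline
        self.centers = np.asarray(centers, dtype=float).copy()
        self.lr = learning_rate

    def predict(self, x):
        distances = np.linalg.norm(self.centers - np.asarray(x, dtype=float), axis=1)
        return int(np.argmin(distances))

    def predict_and_adapt(self, x):
        x = np.asarray(x, dtype=float)
        c = self.predict(x)
        # move the winning center towards the sample (predicted label only)
        self.centers[c] += self.lr * (x - self.centers[c])
        return c


clf = SelfCalibratingNCC([[0.0, 0.0], [10.0, 0.0]], learning_rate=0.5)
for x in ([2.0, 0.0], [4.0, 0.0]):  # class 0 drifting towards +x
    clf.predict_and_adapt(x)
print(clf.centers[0].tolist())   # [2.5, 0.0]
print(clf.predict([5.5, 0.0]))   # 0 (a static classifier would return 1)
```

The learning rate sets how quickly the classifier follows a drift versus how easily a few misclassified samples can pull a center away.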

Online user-adaptive gesture recognition using brain-decoded signals

Unsupervised lifelong adaptation in activity recognition systems

Social context - Sensing collective behavior [2009-]

Nowadays, almost every person has a cellphone equipped with sensors. Sensing on such a massive scale opens up avenues for new applications. Understanding human collective behavior is a challenging multidisciplinary problem with a large number of applications: assistance in emergency and disaster scenarios, urban space planning, and smart traffic management.

Motivated by these possibilities, we investigate how to recognize human collective behaviors from sensors currently included in mobile phones, as well as additional modalities available in our research platforms. We also consider the new possibilities to provide smart assistance based on human collective behavior sensing, e.g. in an emergency situation.

This work is conducted within the EU FP7 FET-Proactive project SOCIONICAL.

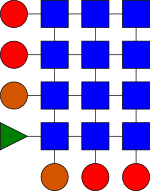

Decentralized Detection of Group Formations from Wearable Acceleration Sensors

Among collective behaviors, the formation of groups of people walking together is a natural occurrence. Addressing a group of persons as a whole, rather than sending messages to individual persons, may become extremely valuable in emergency situations, for instance to direct a group to a specific exit.

Cognitive-Affective Context Recognition

The community has placed special attention on the recognition of human behavior, gestures, or location as important aspects of context. However, the user's context also encompasses cognitive-affective aspects, whose study was pioneered by Rosalind Picard.

Effect of physical activity on the electrodermal activity after a startle event [2008]

Electrodermal activity is commonly used to infer affective aspects such as fear or stress. However, this signal is challenging to interpret in daily life, in particular because of the co-influence of physical activity, which may cause variations in electrode-skin contact pressure or in sweating.

Cognitive awareness from eye movements [2009-]

Eye movements have the particularity of being controlled both consciously and unconsciously. Unconscious eye movements are controlled by the oculomotor plant. These eye movements serve to build a mental map of the environment and are affected by the subject's situation and activities, but also by their cognitive state, such as their proficiency at carrying out a task or their previous exposure to a situation.

Context processing in sensor networks

Programming sensor nodes for distributed activity recognition is challenging when a large number of possibly heterogeneous sensors are involved. We investigate ways to reduce the complexity of describing and executing context recognition algorithms in a distributed manner on sensor nodes.

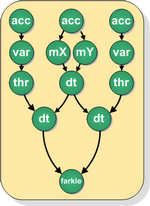

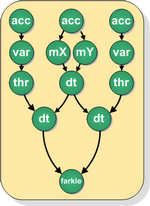

Programming model & execution engine: Tiny Task Network (TITAN) [2005-2009]

We developed TITAN, a programming model and execution engine that simplifies the distributed execution of context recognition algorithms in sensor networks. A context recognition algorithm is represented as an interconnected service graph. Each service can run on a different node, and the communication between nodes (or within a node) is handled transparently.
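To make the service-graph idea concrete, here is a toy, single-process sketch in Python; it is not TITAN's API (TITAN targets embedded sensor nodes), and the class `ServiceGraph`, its methods, and the node names are hypothetical. Each service is a function placed on a named node; when an output crosses a node boundary, the sketch only counts a would-be radio message, so delivery looks the same to a service whether its peer is local or remote:

```python
from collections import defaultdict
from typing import Any, Callable, Dict, List, Optional


class ServiceGraph:
    """Toy dataflow graph: services are functions, edges carry their outputs."""

    def __init__(self) -> None:
        self.fns: Dict[str, Callable[[Any], Optional[Any]]] = {}
        self.placement: Dict[str, str] = {}             # service -> sensor node id
        self.edges: Dict[str, List[str]] = defaultdict(list)
        self.radio_messages = 0                         # inter-node transfers

    def add(self, name: str, fn: Callable[[Any], Optional[Any]], node: str) -> None:
        self.fns[name] = fn
        self.placement[name] = node

    def connect(self, src: str, dst: str) -> None:
        self.edges[src].append(dst)

    def push(self, name: str, value: Any) -> None:
        """Feed a value into a service and propagate its output downstream."""
        out = self.fns[name](value)
        if out is None:  # a service may emit nothing (e.g. a sink)
            return
        for dst in self.edges[name]:
            if self.placement[dst] != self.placement[name]:
                # a real system would send a packet here; we only count it
                self.radio_messages += 1
            self.push(dst, out)


results: List[str] = []
g = ServiceGraph()
g.add("energy", lambda a: a * a, node="wrist")
g.add("threshold", lambda e: "active" if e > 4 else "still", node="wrist")
g.add("sink", lambda label: results.append(label), node="base")
g.connect("energy", "threshold")
g.connect("threshold", "sink")
for sample in [1, 3]:
    g.push("energy", sample)
print(results, g.radio_messages)  # ['still', 'active'] 2
```

Because the algorithm is only a graph, moving `threshold` to the `base` node would change where data crosses the radio without changing any service code, which is the kind of flexibility a transparent execution engine aims for.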

Service discovery, composition and system modeling [2005-2009]

Based on the TITAN framework, we showed how complex services can be composed from simpler ones that are discovered at run time. We also presented a modeling approach to …

Activity recognition

Activity recognition is an important aspect of context. We investigate methods and sensor modalities supporting activity recognition.

Activity spotting using string matching [2005-2008]

Spotting a person's complex gestures while they perform work-related or daily activities remains challenging. Several methods have been proposed in the community to detect specific time series within a continuous stream of data; however, this remains a very difficult problem to date.
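One generic way to spot a gesture with string matching, sketched here under assumptions not stated in the text, is to encode the motion stream as a string of symbols (for instance, quantized movement directions) and to search it for approximate occurrences of a gesture template string. The function below implements Sellers' approximate substring matching, an edit-distance dynamic program in which a match may start anywhere in the stream; the symbol alphabet, the template, and the `max_dist` threshold are hypothetical:

```python
from typing import List, Tuple


def spot(template: str, stream: str, max_dist: int) -> List[Tuple[int, int]]:
    """Approximate substring matching (Sellers' algorithm).

    Returns (end_index, distance) for every stream position where some
    substring ending there is within max_dist edits of the template.
    """
    m = len(template)
    # col[i]: edit distance between template[:i] and the best-matching
    # substring of the stream that ends at the current position
    col = list(range(m + 1))
    hits = []
    for j, symbol in enumerate(stream):
        prev_diag = col[0]
        col[0] = 0  # a match may start anywhere in the stream
        for i in range(1, m + 1):
            cost = 0 if template[i - 1] == symbol else 1
            new = min(col[i] + 1,        # extra stream symbol (insertion)
                      col[i - 1] + 1,    # missing template symbol (deletion)
                      prev_diag + cost)  # match or substitution
            prev_diag, col[i] = col[i], new
        if col[m] <= max_dist:
            hits.append((j, col[m]))
    return hits


print(spot("abc", "xxabcxxabdx", 0))  # [(4, 0)]
```

Raising `max_dist` tolerates more variability in how a gesture is performed, at the price of more spurious detections around each true occurrence.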

Event-based activity recognition [2005-2006]

Activity recognition and HCI from eye movements [2006-]

Bio-inspired techniques in human activity recognition

Bio-inspired techniques, which mimic basic biological behaviors such as learning, evolution, or development, tend to achieve high robustness in adverse conditions and have a great capacity to make the best use of their operating environment. These properties may be advantageous in dynamic, open-ended environments.

Evolving Continuous Time Recurrent Neural Networks as Signal Predictors for Gesture Recognition [2007]

Continuous time recurrent neural networks (CTRNNs) are capable of complex temporal dynamic processing. We show that CTRNNs can be evolved to act as signal (time series) predictors for motion sensor data and can eventually be used for gesture recognition.
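For reference, a CTRNN is commonly defined (following Beer's formulation) by neurons with state y_i, time constant tau_i, bias theta_i, weights w_ji from neuron j to neuron i and external input I_i, with dynamics tau_i dy_i/dt = -y_i + sum_j w_ji sigma(y_j + theta_j) + I_i. The sketch below integrates these dynamics with forward Euler over a 1-D sensor signal normalized to (0, 1) and reads neuron 0 out as the prediction of the next sample. The read-out choice, the step size `dt` and the input weighting `w_in` are assumptions, and the evolutionary search itself is omitted; an evolutionary algorithm would tune `W`, `tau`, `theta` and `w_in` to minimize `prediction_error`:

```python
import numpy as np


def sigmoid(x):
    return 1.0 / (1.0 + np.exp(-x))


def ctrnn_predict(signal, W, tau, theta, w_in, dt=0.01):
    """Run a CTRNN over a 1-D input signal using forward Euler steps.

    Dynamics: tau_i dy_i/dt = -y_i + sum_j W[j, i] * sigmoid(y_j + theta_j) + w_in[i] * u(t)
    The firing rate of neuron 0 is read out as the prediction of the next
    input sample (an illustrative choice), so the signal should lie in (0, 1).
    """
    y = np.zeros(len(tau))
    preds = []
    for u in signal:
        rates = sigmoid(y + theta)              # firing rates of all neurons
        dy = (-y + rates @ W + w_in * u) / tau  # rates @ W sums W[j, i] * rate_j
        y = y + dt * dy                         # one Euler integration step
        preds.append(sigmoid(y[0] + theta[0]))
    return np.array(preds)


def prediction_error(signal, preds):
    """Mean squared error between each prediction and the next input sample."""
    signal = np.asarray(signal, dtype=float)
    return float(np.mean((preds[:-1] - signal[1:]) ** 2))


# Usage with random (not evolved) parameters:
rng = np.random.default_rng(0)
n = 3
W = rng.normal(0.0, 1.0, (n, n))
tau = np.full(n, 0.1)
theta = np.zeros(n)
w_in = np.ones(n)
signal = 0.5 + 0.4 * np.sin(np.linspace(0.0, 4.0 * np.pi, 200))
preds = ctrnn_predict(signal, W, tau, theta, w_in)
print(prediction_error(signal, preds))
```

For gesture recognition, one could then compare the prediction errors of CTRNNs evolved for different gestures, although the exact decision scheme is not described here.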

Evolving robust discriminative features for gesture recognition with genetic programming [2009]

The performance of classifiers is ultimately limited by the discriminative power of the underlying features. Features are usually taken from related work or derived from domain-specific knowledge. Yet the space of possible features is thereby limited to those conceivable by the designer.

Gait analysis

Gait analysis is an important topic in the biomedical community, be it to infer the onset of degenerative illnesses or to assess the progress of rehabilitation. With a different purpose, gait analysis is also of interest to the wearable and pervasive computing community: gait is a promising carrier of contextual information, it is omnipresent, and it can be sensed with unobtrusive sensors integrated in floors or shoes. We investigate what kind of contextual information may be inferred from gait and which new applications it enables, and we outline the challenges and limitations.

Gait-based authentication: promises and challenges [2009]

Template-based approaches using acceleration signals have been proposed for gait-based biometric authentication. In daily life, a number of real-world factors affect the users' gait, and we investigate their effects on authentication performance.
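A minimal sketch of a template-based scheme, assuming gait cycles have already been segmented from a single acceleration axis: enrolment cycles are resampled to a common length and averaged into a template, and a new cycle is accepted if its RMS distance to the template is below a threshold. The function names, the resampling length and the threshold are hypothetical and are not the parameters studied in this work; synthetic sine waves stand in for real gait cycles:

```python
import numpy as np


def resample(cycle, length=100):
    """Linearly resample one gait cycle to a fixed number of samples."""
    cycle = np.asarray(cycle, dtype=float)
    old = np.linspace(0.0, 1.0, len(cycle))
    new = np.linspace(0.0, 1.0, length)
    return np.interp(new, old, cycle)


def build_template(cycles, length=100):
    """Average several enrolment cycles into one template."""
    return np.mean([resample(c, length) for c in cycles], axis=0)


def authenticate(template, cycle, threshold):
    """Accept if the RMS distance between the cycle and the template is below threshold."""
    d = np.sqrt(np.mean((resample(cycle, len(template)) - template) ** 2))
    return bool(d < threshold), float(d)


t80 = np.linspace(0.0, 2.0 * np.pi, 80)
template = build_template([np.sin(t80), 1.05 * np.sin(t80)])
t90 = np.linspace(0.0, 2.0 * np.pi, 90)
print(authenticate(template, np.sin(t90), threshold=0.1)[0])  # True
print(authenticate(template, np.cos(t90), threshold=0.1)[0])  # False
```

Real-world factors such as footwear, walking speed or carried loads change the cycle shape, which in such a scheme shows up directly as a larger distance to the enrolment template.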

Preventing back-pain: detection of on-body load placement from gait alterations [2009]

Back problems are often the result of carrying loads inappropriately: loads that are too heavy, carried for extended periods of time, or placed inappropriately on the body. However, load also influences our gait, which opens up the possibility of detecting on-body load and load placement from sensors unobtrusively integrated in shoes or garments.

Localization

Most localization techniques in wearable and pervasive computing use radio signals (GPS, GSM fingerprinting, etc.) or dead reckoning that integrates the user's acceleration. We proposed and characterized a few alternative approaches.

Vision-based dead-reckoning [2007]

We investigated a dead-reckoning system for wearable computing that tracks the user's trajectory in an unknown, non-instrumented environment by integrating the optical flow. Only a single inexpensive body-worn camera is required, which may also be reused for other purposes such as HCI.
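The integration step can be sketched as follows, assuming an upstream optical-flow stage already provides, for each pair of consecutive frames, the mean image translation (u, v) in pixels and the camera rotation dtheta in radians, and that a constant metres-per-pixel scale is known (for example, from the camera's height above the floor). All names and the scale are hypothetical, and, as in any dead-reckoning scheme, errors accumulate over time:

```python
import math
from typing import Iterable, List, Tuple


def integrate_flow(frames: Iterable[Tuple[float, float, float]],
                   metres_per_pixel: float) -> List[Tuple[float, float]]:
    """Dead reckoning from per-frame optical-flow motion estimates.

    frames: (u, v, dtheta) per consecutive frame pair: mean image translation
    in pixels (camera frame) and camera rotation in radians.
    Returns the list of (x, y) positions, starting at the origin.
    """
    x = y = theta = 0.0
    path = [(x, y)]
    for u, v, dtheta in frames:
        theta += dtheta
        # the scene appears to move opposite to the camera
        dx, dy = -u * metres_per_pixel, -v * metres_per_pixel
        # rotate the camera-frame step into the world frame
        x += math.cos(theta) * dx - math.sin(theta) * dy
        y += math.sin(theta) * dx + math.cos(theta) * dy
        path.append((x, y))
    return path


path = integrate_flow([(-100.0, 0.0, 0.0)] * 10, metres_per_pixel=0.001)
print(path[-1])  # close to (1.0, 0.0)
```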

Healthcare applications

Wearable assistant for patients with Parkinson's disease and Freezing of Gait syndrome [2008-2009]

Freezing of gait (FOG) is a common motor impairment in patients with advanced Parkinson's disease. It typically manifests as a sudden and transient inability to move. FOG has substantial social and clinical consequences for patients: it is a common cause of falls, it interferes …

Technical Platforms

To foster user acceptance, wearable sensors should be small, light, and unobtrusive. They should also be robust and easy to deploy and interface, so that high-quality data can be acquired with little technical setup overhead. A wearable computer is usually desired to collect data from the sensors, run context-recognition algorithms, and interface with the user. We developed several platforms to support our research activities.

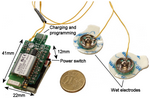

Miniature networked SensorButtons [-2005]

We developed a wearable platform that addresses miniaturization and low-power objectives: it is a miniature networked SensorButton with the form factor of a button, so that it can be integrated in garments in an unobtrusive way. It has several sensor modalities useful in wearable computing, on-board processing power, and a wireless link for sensor networking or communication with a base station.

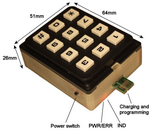

Daphnet wearable computer [2006-2009]

We developed a platform for combined physiological signal acquisition and context awareness, targeting medical applications. It is wearable, has extended battery life, offers enough computational power to run context-recognition algorithms, and is flexible, so that a variable number of sensors can be added to it (an internal bay allows for custom input/output extension boards). The core of the platform is an Intel XScale PXA270 processor running a Linux 2.6 operating system.

"Nanotera" Bluetooth Sensors [2006-]

I developed a number of hardware components for data acquisition and the rapid prototyping of context-aware applications. This "kit" of sensors comprises:

Wearable electrooculography [2006-]

We have investigated technological solutions to sense eye movements using electrooculography while minimizing user disturbance, by integrating the sensing and electronics into the frame of goggles. We have shown that it is possible to recognize a number of user activities from eye movements using machine learning techniques.

Technology for the Rapid Prototyping of Smart Shirts [2006-2009]

Activity and gesture recognition requires placing a number of sensors on the body. To simplify the deployment of on-body sensors (e.g., during data acquisition sessions in the lab or trials with patients), a solution integrated into clothing is desirable.